Data Science Weekly - Issue 545

Curated news, articles and jobs related to Data Science, AI, & Machine Learning

Issue #545

April 18, 2024

Hello!

Once a week, we write this email to share the links we thought were worth sharing in the Data Science, ML, AI, Data Visualization, and ML/Data Engineering worlds.

And now…let's dive into some interesting links from this week.

Editor's Picks

WildChat: 1M ChatGPT Interaction Logs in the Wild

The WildChat Dataset is a corpus of 1 million real-world user-ChatGPT interactions, characterized by a wide range of languages and a diversity of user prompts. It was constructed by offering free access to ChatGPT and GPT-4 in exchange for consensual chat history collection. Using this dataset, we finetuned Meta's Llama-2 and created WildLlama-7b-user-assistant, a chatbot which is able to predict both user prompts and assistant responses…

What's the most practical thing you have done with ai? [Reddit]

I'm curious to see what people have done with current AI tools that you would consider practical. Past the standard image generating and simple question-answer prompts, what have you done with AI that has been genuinely useful to you? Mine, for example, is creating a UI which lets you select a country, start year and end year, as well as an interval of months or years, and when you hit send a series of prompts are sent to ollama asking it to provide a detailed description of what happened during that time period in that country, then saves all output to text files for me to read. Very useful to find interesting history topics to learn more about and look up…

Patterns and anti-patterns of data analysis reuse

A speed-run through four stages of data analysis reuse, to the end game you probably guessed was coming…Every data analysis / data scientist role I’ve worked in has had a strong theme of redoing variations of the same analysis. I expect this is something of an industry-wide trend…your sanity now depends on re-using as much work as possible across these achingly-samey-but-subtly-different projects. You and your team need to set yourselves up so you can re-cover old ground quickly, hopefully ensuring that you have plenty of time to build on capabilities you’ve already proven, rather than just executing and re-executing the same capability until you all get bored and quit…So now let’s look at the ways you can reuse your data analysis work…

A Message from this week's Sponsor:

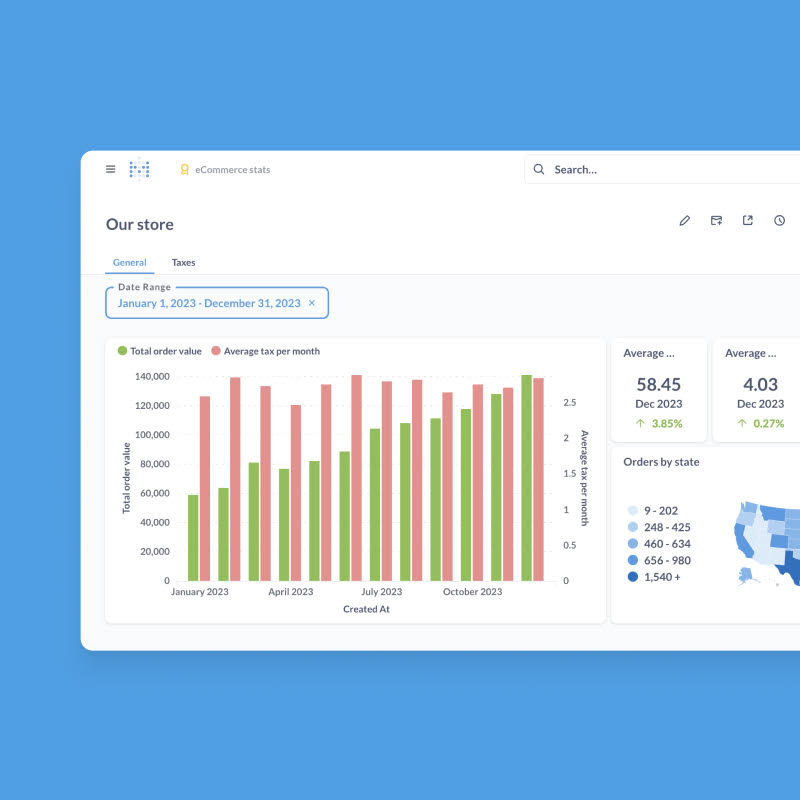

Get easy-to-use business intelligence for your startup

Metabase’s intuitive BI tools empower your team to effortlessly report and derive insights from your data. Compatible with your existing data stack, Metabase offers both self-hosted and cloud-hosted (SOC 2 Type II compliant) options. In just minutes, most teams connect to their database or data warehouse and start building dashboards—no SQL required. With a free trial and super affordable plans, it's the go-to choice for venture-backed startups and over 50,000 organizations of all sizes. Empower your entire team with Metabase. Read more.

* Want to sponsor the newsletter? Email us for details --> team@datascienceweekly.org

Data Science Articles & Videos

I'm writing a new vector search SQLite Extension

I'm working on a new SQLite extension! It's called sqlite-vec, an extension for vector search, written purely in C. It's meant to replace sqlite-vss, another vector search SQLite extension I released in February 2023, which has a number of problems. I believe the approach I'm taking with sqlite-vec solves a number of problems its predecessor has, will have a much nicer and more performant SQL API, and is a better fit for all applications that want an embedded vector search solution!…

How to Beat Proprietary LLMs With Smaller Open Source Models

Building your AI applications around open source models can make them better, cheaper, and faster…In this article, we explore the unique advantages of open source LLMs, and how you can leverage them to develop AI applications that are not just cheaper and faster than proprietary LLMs, but better too…

Hacking on PostgreSQL is Really Hard

Hacking on PostgreSQL is really hard. I think a lot of people would agree with this statement, not all for the same reasons. Some might point to the character of discourse on the mailing list, others to the shortage of patch reviewers, and others still to the difficulty of getting the attention of a committer, or of feeling like a hostage to some committer's whimsy. All of these are problems, but today I want to focus on the purely technical aspect of the problem: the extreme difficulty of writing reasonably correct patches…

Styling a River Network with Expressions (QGIS3)

In the previous tutorial Creating a Block World Map (QGIS3) we used expressions for scaling values and applying a color ramp. We build on those concepts and learn how to use expressions to visualize rivers in a popular style. Note: This tutorial focuses on the use of expressions for styling. You can check out our other tutorial Creating a Colorized River Basin Map (QGIS3) that creates a different version of the map shown here using a tools-based workflow…We will use expressions to filter and style South American rivers - with line widths representing upland area and colors representing basin id from HydroRIVERS…

Testing percentiles

Here’s another installment in Data Q&A: Answering the real questions with Python…Here’s a question from the Reddit statistics forum. I have two different samples (about 100 observations per sample) drawn from the same population (or that’s what I hypothesize; the populations may in fact be different). The samples and population are approximately normal in distribution. I want to estimate the 85th percentile value for both samples, and then see if there is a statistically significant difference between these two values. I cannot use a normal z- or t-test for this, can I? It’s my current understanding that those tests would only work if I were comparing the means of the samples. As an extension of this, say I wanted to compare one of these 85th percentile values to a fixed value; again, if I was looking at the mean, I would just construct a confidence interval and see if the fixed value fell within it…but the percentile stuff is throwing me for a loop…

Black Box Reductions: Constrained Online Learning

This is the first post on a new topic: how to reduce one online learning problem into another in a black-box way. That is, we will use an online convex optimization algorithm to solve a problem different from what it was meant to solve, without looking at its internal working in any way. The only thing we will change will be the input we pass to the algorithm. Why do it? Because you might have optimization software that you cannot modify or just because designing and analyzing an online learning algorithm in one case might be easier…Here, I’ll explain how to deal with constraints in online convex optimization in a black box way, and as a bonus, I’ll show how to easily prove the regret guarantee of the Regret Matching+ algorithm…

Causal machine learning for predicting treatment outcomes

Causal machine learning (ML) offers flexible, data-driven methods for predicting treatment outcomes including efficacy and toxicity, thereby supporting the assessment and safety of drugs. A key benefit of causal ML is that it allows for estimating individualized treatment effects, so that clinical decision-making can be personalized to individual patient profiles…In this Perspective, we discuss the benefits of causal ML (relative to traditional statistical or ML approaches) and outline the key components and steps. Finally, we provide recommendations for the reliable use of causal ML and effective translation into the clinic…

Best CUDA Course - Intro to Parallel Programming

The best CUDA intro course by NVIDIA, with 460 bite-sized videos. It was the course released with Udacity 9 years ago. It is kind of old, but you can grasp the core ideas from it…

Fixing DPO but I have a dinner reservation

Direct preference optimization (DPO; https://arxiv.org/abs/2305.18290) is all the rage, i heard. i also hear from my students that DPO, which minimizes the following loss, often results in weird behaviours, such as unreasonable preference toward lengthy responses (even when there is no statistical difference in lengths between desirable and undesirable responses.) i won’t go into details of these issues, but i feel like there’s a relatively simple reason behind these pathologies based on basic calculus…
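
The loss referred to above is not reproduced in this excerpt. As a rough sketch, and assuming the standard per-example DPO objective from the linked paper, the loss is the negative log-sigmoid of a scaled difference in log-probability ratios between the preferred and the rejected response. The function and argument names below are hypothetical, and beta=0.1 is only an illustrative value.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(policy_logp_w: float, policy_logp_l: float,
             ref_logp_w: float, ref_logp_l: float,
             beta: float = 0.1) -> float:
    """Per-example DPO loss (sketch).

    *_w: log-probability of the preferred ("winning") response.
    *_l: log-probability of the rejected ("losing") response.
    policy_*: under the model being trained; ref_*: under the frozen reference.
    """
    # Scaled difference of log-ratios between preferred and rejected responses.
    margin = beta * ((policy_logp_w - ref_logp_w) - (policy_logp_l - ref_logp_l))
    return -log_sigmoid(margin)


if __name__ == "__main__":
    # When the policy equals the reference, the margin is 0 and the loss is log(2).
    print(dpo_loss(-5.0, -7.0, -5.0, -7.0))
```

Raising the policy's log-probability of the preferred response, or lowering that of the rejected one, increases the margin and lowers the loss.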

KRAGEN - Software to implement GoT with a Weaviate vectorized database

Knowledge Retrieval Augmented Generation ENgine is a tool that combines knowledge graphs, Retrieval Augmented Generation (RAG), and advanced prompting techniques to solve complex problems with natural language. KRAGEN converts knowledge graphs into a vector database and uses RAG to retrieve relevant facts from it. KRAGEN uses advanced prompting techniques: namely graph-of-thoughts (GoT), to dynamically break down a complex problem into smaller subproblems, and proceeds to solve each subproblem by using the relevant knowledge through the RAG framework, which limits the hallucinations, and finally, consolidates the subproblems and provides a solution…

High Agency Pydantic > VC Backed Frameworks — with Jason Liu of Instructor

Structured output vs function calling, state of the AI engineering stack, when to NOT raise venture capital, and career advice for aspiring AI Engineers…

Scaling Up “Vibe Checks” for LLMs - Shreya Shankar | Stanford MLSys #97

Large language models (LLMs) are increasingly being used to write custom pipelines that repeatedly process or generate data of some sort. Despite their usefulness, LLM pipelines often produce errors, typically identified through manual “vibe checks” by developers. This talk explores automating this process using evaluation assistants, presenting a method for automatically generating assertions and an interface to help developers iterate on assertion sets. We share takeaways from a deployment with LangChain, where we auto-generated assertions for 2000+ real-world LLM pipelines. Finally, we discuss insights from a qualitative study of how 9 engineers use evaluation assistants: we highlight the subjective nature of "good" assertions and how they adapt over time with changes in prompts, data, LLMs, and pipeline components…
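
To make "assertions" on LLM pipeline output concrete, here is a minimal hand-written sketch. It is not the talk's method, which generates assertions automatically; the check names, the word limit, and the `failed_assertions` helper are all hypothetical and only illustrate the kind of check that can replace a manual vibe check.

```python
import json
from typing import Callable, Dict, List


def is_valid_json(output: str) -> bool:
    """Check that the model output parses as JSON."""
    try:
        json.loads(output)
        return True
    except json.JSONDecodeError:
        return False


def within_word_limit(output: str, limit: int = 50) -> bool:
    """Check that the output has at most `limit` whitespace-separated words."""
    return len(output.split()) <= limit


# Hypothetical assertion set for one pipeline step.
ASSERTIONS: Dict[str, Callable[[str], bool]] = {
    "valid_json": is_valid_json,
    "word_limit": within_word_limit,
}


def failed_assertions(output: str,
                      assertions: Dict[str, Callable[[str], bool]]) -> List[str]:
    """Return the names of all assertions the output fails."""
    return [name for name, check in assertions.items() if not check(output)]


if __name__ == "__main__":
    print(failed_assertions('{"summary": "ok"}', ASSERTIONS))
    print(failed_assertions("not json at all", ASSERTIONS))
```

Keeping the assertions in a named dictionary makes it easy to add, drop, or revise checks as prompts and data change.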

Training & Resources

PySpark Course: Big Data Handling with Python and Apache Spark

PySpark, the Python API for Apache Spark, empowers data engineers, data scientists, and analysts to process and analyze massive datasets efficiently. In this course, you'll dive deep into the fundamentals of PySpark, learning how to harness the combined power of Python and Apache Spark to handle big data challenges with ease. From data manipulation and transformations to advanced analytics and machine learning, you'll gain hands-on experience in this course…

Mastering GDAL Tools (Full Course Material)

A practical hands-on introduction to GDAL and OGR command-line programs…GDAL is an open-source library for raster and vector geospatial data formats. The library comes with a vast collection of utility programs that can perform many geoprocessing tasks. This class introduces GDAL and OGR utilities with example workflows for processing raster and vector data. The class also shows how to use these utility programs to build Spatial ETL pipelines and do batch processing…

A transformer walk-through, with Gemma

Transformer-based LLMs seem mysterious, but they don’t need to. In this post, we’ll walk through a modern transformer LLM, Google’s Gemma, providing bare-bones PyTorch code and some intuition for why each step is there. If you’re a programmer and casual ML enthusiast, this is written for you…Our problem is single-step prediction: take a string e.g. “I want to move”, and use an already-trained language model (LM) to predict what could come next. This is the core component of chatbots, coding assistants, etc, since we can easily chain these predictions together to generate long strings. We’ll walk through this example using Gemma 2B, with an accompanying notebook which provides unadorned code for everything we’ll see…
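
The idea of chaining single-step predictions into longer strings can be sketched without any ML library. The lookup table below is a hypothetical toy stand-in for a trained model, not Gemma and not the post's notebook code: it scores the next token from the last token only, whereas a real transformer conditions on the whole prefix.

```python
from typing import Dict, List

# Toy "language model": next-token scores keyed by the last token (an assumption
# made for illustration; a real LM would use the full token sequence).
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "I": {"want": 0.9, "am": 0.1},
    "want": {"to": 1.0},
    "to": {"move": 0.6, "go": 0.4},
    "move": {"on": 0.7, "out": 0.3},
    "on": {"<eos>": 1.0},
}


def predict_next(tokens: List[str]) -> str:
    """Single-step prediction: return the highest-scoring next token."""
    scores = TOY_MODEL.get(tokens[-1], {"<eos>": 1.0})
    return max(scores, key=scores.get)


def generate(prompt: str, max_new_tokens: int = 10) -> str:
    """Chain single-step predictions (greedy decoding) until <eos> or the limit."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        next_token = predict_next(tokens)
        if next_token == "<eos>":
            break
        tokens.append(next_token)
    return " ".join(tokens)


if __name__ == "__main__":
    print(generate("I want to move"))
```

Swapping `predict_next` for a call into a real model is the only change needed to turn this loop into actual text generation.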

Last Week's Newsletter's 3 Most Clicked Links

* Based on unique clicks.

** Find last week's issue #544 here.

Cutting Room Floor

Scaling hierarchical agglomerative clustering to trillion-edge graphs

Matryoshka Representation Learning and Adaptive Semantic Search

SUQL: Conversational Search over Structured and Unstructured Data with Large Language Models

Shifting Tides: The Competitive Edge of Open Source LLMs over Closed Source LLMs

How DSPy optimizers work — a Q&A part of the talk not recorded by the venue!

Whenever you're ready, 2 ways we can help:

Looking to get a job? Check out our “Get A Data Science Job” Course

It is a comprehensive course that teaches you everything related to getting a data science job based on answers to thousands of emails from readers like you. The course has 3 sections: Section 1 covers how to get started, Section 2 covers how to assemble a portfolio to showcase your experience (even if you don’t have any), and Section 3 covers how to write your resume.

Promote yourself/organization to ~61,500 subscribers by sponsoring this newsletter. 35-45% weekly open rate.

Thank you for joining us this week! :)

Stay Data Science-y!

All our best,

Hannah & Sebastian